The advent of cloud and container technology ushered in a new era of distributed computing at “planet scale”, something unheard of and unimaginable just a decade ago. Around the same time another movement was brewing, one that made it possible to deliver these complex solutions quickly and accurately: DevOps. These two paradigm shifts have gone hand in hand, complementing each other to shape distributed computing as we know it (or are still learning about it) today.

We all know how integral Continuous Integration and Continuous Deployment are to the DevOps automation paradigm, and how organizations have designed elaborate pipelines to bring a factory-floor model to shipping software.

If you’ve ever been part of implementing any of these DevOps practices for a cloud-native distributed system, you probably know how quickly these CI/CD pipelines become a cacophony of complex tools and integrations that need their own sub-organization of specialists to build and maintain them, thereby adding to the very silos the practices set out to break.

Consider a large system composed of multiple distributed subsystems, usually deployed as Docker containers clustered over an orchestration runtime such as Kubernetes. For such systems these pipelines are anything but easy. At any given time you’d be dealing with at least five tools, covering everything from triggers to running builds and packaging, creating test environments, running tests and finally the holy grail of one-click deploy (if it is holy at all!).

Some good people at the Knative project at Google felt this problem deeply enough to come up with a solution that (I believe) is one of the best attempts yet at building shift-left pipelines: Tekton.

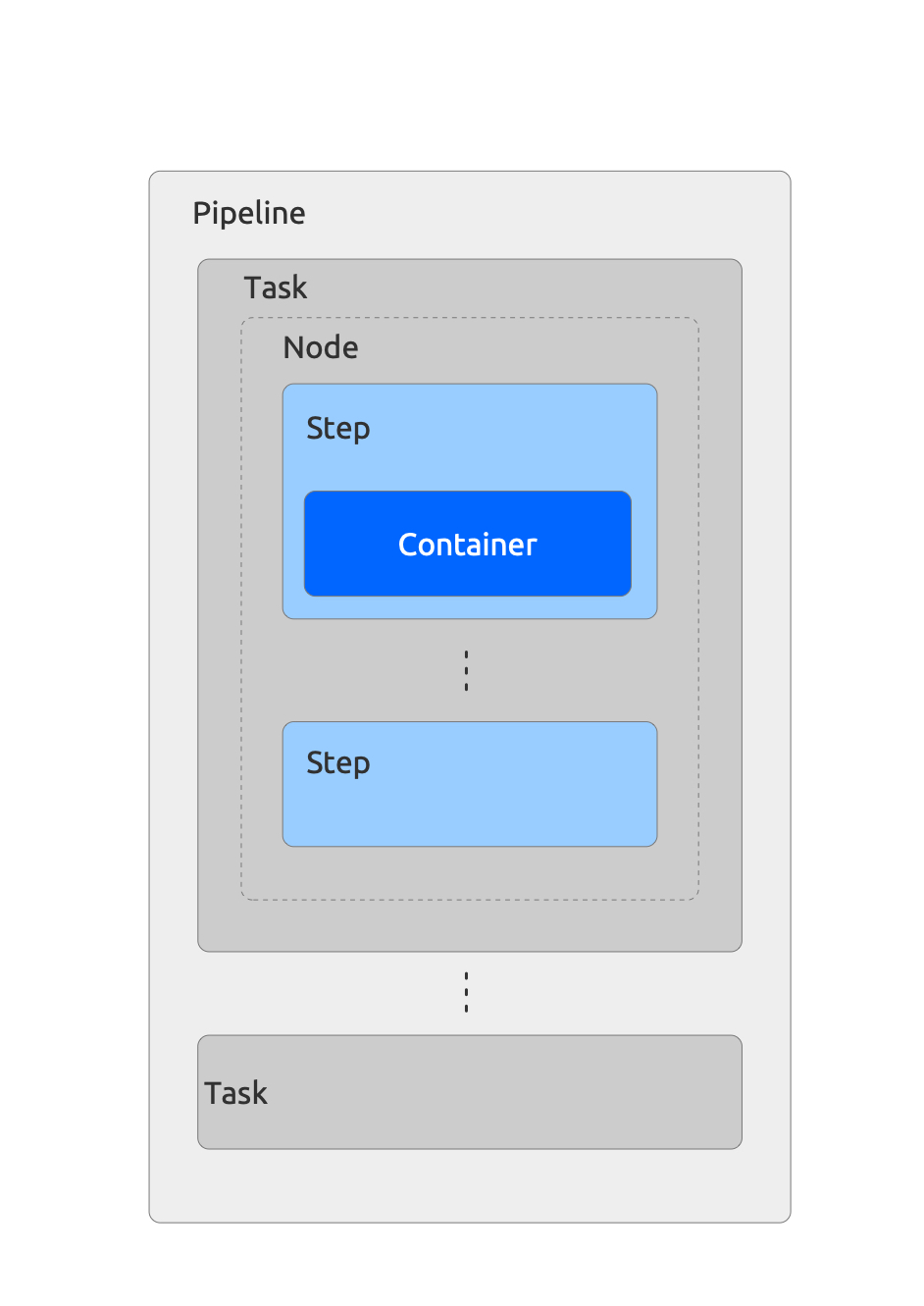

Tekton aims to bring much-needed simplicity and uniformity to creating and running these pipelines by providing a highly reusable, declarative, component-based, cloud-native build system that uses Kubernetes CRDs (Custom Resource Definitions) to get the job done. In the Tekton philosophy, any pipeline can be broken down into the following three key parts

The vision, therefore, is to cut through the inconsistency and complexity and provide a mechanism for building pipelines that are easy to write and maintain, and that are reusable across builds running on the same build-tool/language/framework combination.

Tekton defines resources that fulfill the characteristics shown above, letting you concentrate on what needs to be done and when, and leaving the how to the underlying implementation. Let’s look at the key building blocks of a Pipeline created with Tekton.

The most basic Tekton components are Steps. A Step is essentially a Kubernetes container spec, an existing resource type that lets you define an image and the information you need to run it. For example:

    steps:
      - image: ubuntu # contains bash
        script: |
          #!/usr/bin/env bash
          echo "Hello from Bash!"

A Task is composed of one or more Steps (you can make your Tasks as coarse or as fine-grained as you wish) and is the unit of work in a pipeline that achieves a specific goal (building a JAR archive, building a Docker image, running tests, etc.). For example, the following Task runs a Maven build:

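    apiVersion: tekton.dev/v1beta1
    kind: Task
    metadata:
      name: mvn
    spec:
      workspaces:
        # Holds the project source that Maven builds.
        - name: output
        # Local Maven repository; declared here because the step below references it.
        - name: maven-repo
      params:
        - name: GOALS
          description: The Maven goals to run
          type: array
          default: ["package"]
      steps:
        - name: mvn
          image: gcr.io/cloud-builders/mvn
          workingDir: /workspace/output
          command: ["/usr/bin/mvn"]
          args:
            - -Dmaven.repo.local=$(workspaces.maven-repo.path)
            # v1beta1 uses params.* (not inputs.params.*); [*] expands the array.
            - "$(params.GOALS[*])"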
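A Task on its own only describes work; to actually execute it you create a TaskRun that references it. The manifest below is a minimal sketch rather than a definitive setup. It assumes the `mvn` Task declares both the `output` and `maven-repo` workspaces that its step refers to, and the `mvn-run-` prefix and the `source-pvc` claim are hypothetical names chosen for illustration.

```yaml
apiVersion: tekton.dev/v1beta1
kind: TaskRun
metadata:
  # Hypothetical prefix; Kubernetes appends a random suffix to it.
  generateName: mvn-run-
spec:
  taskRef:
    name: mvn
  params:
    # Overrides the Task's default goals of ["package"].
    - name: GOALS
      value: ["clean", "package"]
  workspaces:
    # Assumption: a PVC named source-pvc already holds the project source.
    - name: output
      persistentVolumeClaim:
        claimName: source-pvc
    # Throwaway local Maven repository; discarded when the Pod ends.
    - name: maven-repo
      emptyDir: {}
```

Because the manifest uses `generateName`, it would be submitted with `kubectl create -f` rather than `kubectl apply -f`.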

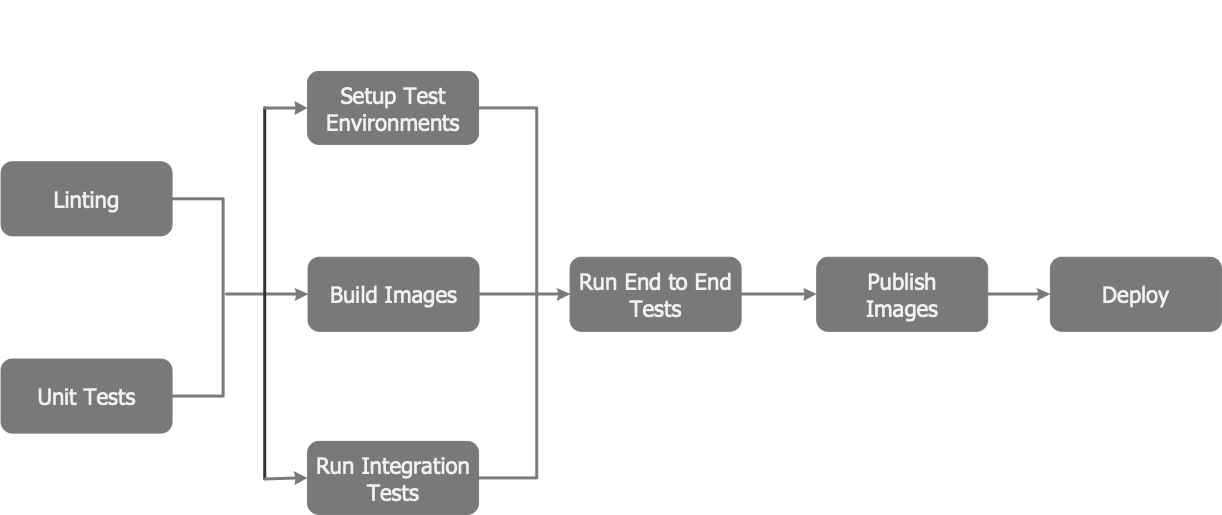

A Pipeline is a collection of Tasks that you define and arrange in a specific order of execution as part of your continuous integration flow. Each Task in a Pipeline executes as a Pod on your Kubernetes cluster. You can configure various execution conditions to fit your business needs. A Pipeline can represent an entire workflow or just a part of one, as you desire. Here’s a diagrammatic representation of what a pipeline would achieve in Tekton.

Let’s look at what we would expect a pipeline to run for most modern-day projects:

That’s where the power of this tool lies: being able to author any number of pipelines without having to integrate multiple tools or manage complex orchestration. This thoroughly DRY approach to automated CI/CD pipelines is certainly a great tool at the disposal of software development teams.
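To make the idea of composing reusable Tasks concrete, here is a minimal sketch of a Pipeline that fetches source code and then runs the `mvn` Task. It is an illustration under assumptions, not the only way to wire things up: it assumes the `git-clone` Task from the Tekton catalog is installed in the cluster and that the `mvn` Task declares `output` and `maven-repo` workspaces. The names `build-java-app`, `repo-url`, `source` and `maven-cache` are hypothetical.

```yaml
apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: build-java-app          # hypothetical name
spec:
  params:
    - name: repo-url            # hypothetical parameter: Git URL of the project
      type: string
  workspaces:
    - name: source              # shared between the two Tasks
    - name: maven-cache         # local Maven repository
  tasks:
    - name: fetch-source
      taskRef:
        name: git-clone         # assumption: installed from the Tekton catalog
      params:
        - name: url
          value: $(params.repo-url)
      workspaces:
        - name: output
          workspace: source
    - name: build
      runAfter: ["fetch-source"]   # explicit ordering between Tasks
      taskRef:
        name: mvn
      params:
        - name: GOALS
          value: ["clean", "package"]
      workspaces:
        - name: output
          workspace: source
        - name: maven-repo
          workspace: maven-cache
```

The same `mvn` Task could be referenced from any number of other Pipelines with different goals, which is where the reuse comes from.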

Now that we’ve seen what Tekton is all about and the promise it brings to the table, let’s see how well it lives up to it.

To install Tekton Pipelines, the core component of Tekton, run the command below. This assumes you already have a Kubernetes cluster up and running; if not, set one up first.

    kubectl apply --filename https://storage.googleapis.com/tekton-releases/pipeline/latest/release.yaml

It may take a few moments before the installation completes. You can check the progress with the following command:

    kubectl get pods --namespace tekton-pipelines

Confirm that every component listed has the status Running.

To run a CI/CD workflow, you need to provide Tekton with a Persistent Volume for storage. By default, Tekton requests a 5Gi volume using the default storage class. Your Kubernetes cluster, such as one from Google Kubernetes Engine, may have persistent volumes set up at creation time, in which case no extra step is required; if not, you may have to create them manually. Alternatively, you may ask Tekton to use a Google Cloud Storage bucket or an AWS Simple Storage Service (Amazon S3) bucket instead. Note that the performance of Tekton may vary depending on the storage option you choose.

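To change these defaults, for example to request a 10Gi volume from a storage class named manual, update the config-artifact-pvc ConfigMap:

    kubectl create configmap config-artifact-pvc \
      --from-literal=size=10Gi \
      --from-literal=storageClassName=manual \
      -o yaml -n tekton-pipelines \
      --dry-run=client | kubectl replace -f -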
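If your cluster does not provision volumes for you, a PersistentVolume matching that request has to exist. The manifest below is a rough sketch of one for a single-node test cluster; the name `tekton-artifacts-pv` and the host path `/mnt/tekton-data` are hypothetical, and `hostPath` volumes are generally not suitable for multi-node or production clusters.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: tekton-artifacts-pv     # hypothetical name
spec:
  storageClassName: manual      # matches storageClassName in config-artifact-pvc
  capacity:
    storage: 10Gi               # matches the size requested in config-artifact-pvc
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: /mnt/tekton-data      # hypothetical directory on the node
```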

For more specific details on the installation and configuration of Tekton, you may refer to its documentation.

In this post we saw what Tekton brings to the table as a way to author highly scalable pipelines built from reusable Tasks, and how to quickly get it up and running on a Kubernetes cluster. In the next part we will look at building and running a pipeline on Tekton for a simple Java application.